Last updated on July 10th, 2021 at 08:55 am

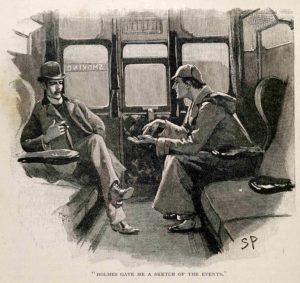

Gregory (Scotland Yard detective): “Is there any other point to which you would wish to draw my attention?”

Holmes: “To the curious incident of the dog in the night-time.”

Gregory: “The dog did nothing in the night-time.”

Holmes: “That was the curious incident.”

From this, Holmes deduces that the dog’s master was the villain. This is an example of looking for what isn’t there, and failing to notice it. It’s an example of absence blindness.

Original book illustration, courtesy Wikimedia Commons.

People and companies are developing technologies for assessing the nature and volume of technical debt borne by enterprise assets. The key word is developing. Some tools do exist, and they can be helpful. But they can’t do it all. Most assessments also rely on surveys and interviews of engineers and their managers. But these tools have limitations, too. Among these limitations is undercounting nonexistent debt items in surveys about technical debt.

It’s well known that survey results can exhibit biases. Collectively, these biases are known as response biases [Furnham 1986]. Sources of response bias include phrasing of questions, the demeanor of the interviewer, the desires of the participants to be good experimental subjects, attempts by subjects to respond with the “right answers,” selection of subjects, and more. These sources of bias are real, and we must address them when we design surveys.

Selection bias and absence blindness

But I have in mind here a set of biases more specific to technical debt. For example, when we ask subjects for examples of technical debt, they’re more likely to recall and provide examples of artifacts that exist than they are to provide examples of artifacts that don’t exist. This happens because of a cognitive bias called selection bias. The effect isn’t intentional, and it can dramatically skew results.

Selection bias is an example of a cognitive bias. In this case, selection bias acts to skew the data in such a way as to interfere with proper randomization, which ensures that the sample data doesn’t accurately represent the actual population of technical debt artifacts. Specifically, the data will tend to under-represent technical debt artifacts that don’t exist. Related phenomena are absence blindness and survivorship bias.

For example, regression testing is an essential step used in refactoring systems. When regression tests are unavailable, and we try to refactor a system to retire some of its technical debt, we face a problem. We can’t be certain that we haven’t changed something important. And so, when a survey design doesn’t mitigate the effects of selection bias, we can expect an elevated probability of failing to note any missing regression tests.

Mitigating the risk of undercounting nonexistent debt items

It’s helpful for surveys to include questions that specifically ask subjects to report technical debt items that don’t exist, but which would be helpful if they did exist—like missing regression tests. Even more helpful: conduct brainstorming sessions for engineers in which the goal is to list missing artifacts, tools, or processes that comprise technical debt precisely because they’re missing.

References

[Furnham 1986] Adrian Furnham. “Response bias, social desirability and dissimulation,” Personality and Individual Differences 7:3, 385-400, 1986.